Written by: Michael Mozer, Scientific Advisor at AnswerOn and Professor at the University of Colorado

Although neural networks have been around for sixty years (von Neumann, 1958), a seminal research paper appeared thirty years ago that revolutionized the field. The paper was called Learning representations by back-propagating errors (Rumelhart, Hinton, & Williams, 1986). In this post, I’ll explain what “learning representations” means and why this idea is so central to neural networks.

Let’s discuss a neural network that recognizes hand-printed digits.

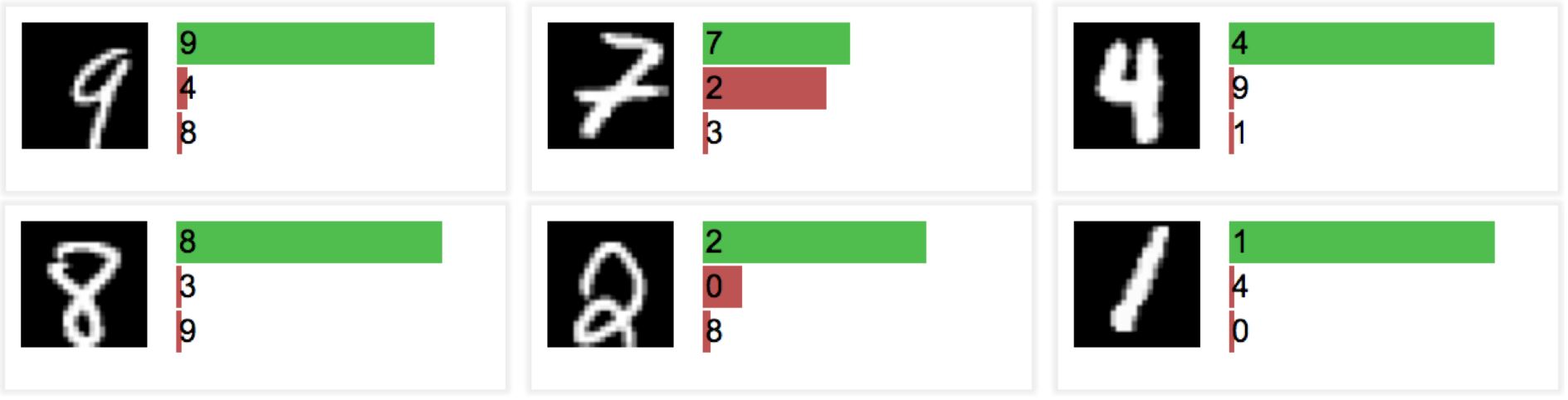

The neural net is given images like the ones here. (These examples come from some very cool neural net software by Andrej Karpathy that you can run in a web browser.) Beside each digit is the neural net’s guess about the digit classification. For example, the hand-printed 7 in the center is correctly identified by the neural net as a 7, but the net thinks it might also be a 2 or possibly a 3.

The input to this neural network is a representation (or encoding) of the image. The image is a grid of 24×24 pixels, and each pixel has an intensity level ranging from 0 to 1 (dark to light). As far as the neural net is concerned, each image is just a list of 576 (= 24×24) numbers. The output produced by the neural network is a representation of the character in the image (“0”, “1”, “2”, …, “9”). This representation consists of 10 confidence levels, one for each of the possible characters. The length of a green or red bar in the figure above indicates the confidence level for a character. Green is correct; red is an error.
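To make the two representations concrete, here is a minimal sketch in plain Python. The image and the weights are random stand-ins, not a trained network, and the use of a softmax to turn scores into confidence levels is my assumption rather than something the figure tells us. The names `flatten`, `softmax`, and `weights` are hypothetical.

```python
import math
import random

SIDE = 24  # the images in this post are 24x24 pixels

def flatten(image):
    """Turn a 24x24 grid of intensities into a flat list of 576 numbers."""
    return [pixel for row in image for pixel in row]

def softmax(scores):
    """Rescale arbitrary scores into positive confidences that sum to 1."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

# A made-up image: random intensities between 0 and 1
rng = random.Random(0)
image = [[rng.random() for _ in range(SIDE)] for _ in range(SIDE)]

pixels = flatten(image)  # input representation: 576 numbers

# Untrained random weights (hypothetical): one row of 576 weights per digit
weights = [[rng.gauss(0, 0.05) for _ in pixels] for _ in range(10)]
scores = [sum(w * p for w, p in zip(row, pixels)) for row in weights]
confidences = softmax(scores)  # output representation: 10 numbers

# The net's guess is the digit with the highest confidence
guess = max(range(10), key=lambda d: confidences[d])
print(len(pixels), len(confidences), guess)
```

Because the weights are random, the guess is meaningless; the point is only the shape of the data going in and coming out.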

One way to conceptualize the operation of the neural net is that it is transforming representations: from the 576 pixel intensities to the 10 confidence values. For easy problems, directly transforming input representations to output representations is sensible. But for difficult problems, intermediate representations are useful. For example, suppose we extracted loops and strokes from the image and came up with an intermediate representation that codes features such as:

- Is there a loop on top? (The loop should be present for an 8 or a 9.)

- Is there a loop on the bottom? (The loop should be present for a 2, 6, or 8.)

- Is there a horizontal stroke in the center? (The stroke should be present for 3, 4, 5, and some 7’s.)

- Is there a vertical stroke in the center? (The stroke should be present for some 1’s, 4’s, and 9’s.)

The value of this intermediate representation is that it allows the net to make sensible guesses about the character identity. In contrast, the input representation is not helpful: knowing that the pixel in the image center has intensity level .75 tells you little about the identity.
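As a rough illustration of why such features help, the sketch below lets each detected feature vote for the digits it is consistent with, using the digit sets from the list above. The voting scheme and the names `FEATURE_DIGITS`, `score_digits`, and `best_guesses` are hypothetical, and treating “some 7’s” and “some 1’s” as always consistent is a simplification.

```python
# Which digits are consistent with each feature, taken from the list above.
FEATURE_DIGITS = {
    "loop_top": {8, 9},
    "loop_bottom": {2, 6, 8},
    "horizontal_center": {3, 4, 5, 7},
    "vertical_center": {1, 4, 9},
}

def score_digits(present):
    """Count, for each digit, how many of the detected features support it."""
    votes = {digit: 0 for digit in range(10)}
    for feature in present:
        for digit in FEATURE_DIGITS[feature]:
            votes[digit] += 1
    return votes

def best_guesses(present):
    """Return the digits with the most supporting features, in ascending order."""
    votes = score_digits(present)
    top = max(votes.values())
    return sorted(digit for digit, count in votes.items() if count == top)

print(best_guesses({"loop_top", "loop_bottom"}))               # [8]
print(best_guesses({"loop_top", "vertical_center"}))           # [9]
print(best_guesses({"horizontal_center", "vertical_center"}))  # [4]
```

Four yes/no features narrow the possibilities far more than any single pixel intensity could. A real network learns graded versions of such features instead of having them written by hand.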

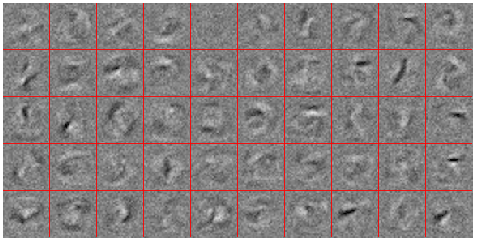

Below is a depiction of an intermediate representation learned by a neural net.

The red lines define a 5×10 grid. Each pattern within the grid specifies a detector for an intermediate feature. White and black indicate that the detector is looking for the presence or absence of ink in the input image. These patterns are a bit spooky, but you should be able to see strokes and loops. What may be most distinctive in the patterns is that the intermediate representation is often defined by the absence of ink, or by the absence of ink next to the presence of ink, which defines an edge. Interestingly, the patterns that neuroscientists find for actual neurons in visual cortex have qualitatively similar properties.
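One way to read such a pattern is as a template of weights: positive where the detector wants ink, negative where it wants no ink. The sketch below uses a tiny hypothetical 4×4 template, not one of the learned patterns, and assumes for readability that a pixel value of 1 means ink.

```python
def detector_response(template, image):
    """Sum of pixel-by-pixel products: high when the image matches the template."""
    return sum(
        weight * pixel
        for template_row, image_row in zip(template, image)
        for weight, pixel in zip(template_row, image_row)
    )

# Hypothetical template: +1 wants ink, -1 wants no ink, 0 does not care.
# No ink on the left next to ink on the right defines a vertical edge.
edge_template = [
    [0, -1, 1, 0],
    [0, -1, 1, 0],
    [0, -1, 1, 0],
    [0, -1, 1, 0],
]

edge_image = [[0, 0, 1, 1] for _ in range(4)]   # blank left half, inked right half
solid_image = [[1, 1, 1, 1] for _ in range(4)]  # ink everywhere, so no edge
blank_image = [[0, 0, 0, 0] for _ in range(4)]  # no ink at all

print(detector_response(edge_template, edge_image))   # 4
print(detector_response(edge_template, solid_image))  # 0
print(detector_response(edge_template, blank_image))  # 0
```

The detector stays quiet for solid ink because the negative weights cancel the positive ones. That is the sense in which absence of ink matters as much as presence.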

The intermediate representation is sometimes referred to as a hidden representation, and the neurons detecting the above patterns are often referred to as hidden units or collectively as a hidden layer. The term ‘hidden’ comes from the fact that the representation is hidden between the input and the output. For a long time, it was believed that a single hidden layer was sufficient for almost all problems, but recently researchers have made the surprising finding that more hidden layers work better for a wide range of problems. Essentially, more hidden layers means more stages in the transformation from input representations to output representations. A neural net with many stages is a deep architecture, and training this network to determine the intermediate representations is called deep learning. In subsequent posts, I’ll explain why it’s surprising that deep learning works, and explain techniques that AnswerOn uses to make it work even better.
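The idea of stages can be sketched as a chain of layers, each turning one representation into the next. Everything specific here is an assumption for illustration: the weights are random and untrained, the sigmoid squashing function is my choice, the first hidden layer has 50 units only to echo the 5×10 grid above, and the second hidden layer is hypothetical.

```python
import math
import random

def layer(inputs, weights, biases):
    """One stage: weighted sums of the inputs, squashed into the range 0 to 1."""
    return [
        1.0 / (1.0 + math.exp(-(sum(w * x for w, x in zip(row, inputs)) + b)))
        for row, b in zip(weights, biases)
    ]

def random_layer(n_in, n_out, rng):
    """Untrained stand-in for a learned layer: small random weights, zero biases."""
    weights = [[rng.gauss(0, 0.1) for _ in range(n_in)] for _ in range(n_out)]
    biases = [0.0] * n_out
    return weights, biases

def forward(inputs, layers):
    """Return every representation along the way, input first, output last."""
    representations = [inputs]
    for weights, biases in layers:
        representations.append(layer(representations[-1], weights, biases))
    return representations

rng = random.Random(0)
sizes = [576, 50, 50, 10]  # pixels, two hidden layers, digit confidences
layers = [random_layer(a, b, rng) for a, b in zip(sizes, sizes[1:])]
pixels = [rng.random() for _ in range(sizes[0])]

reps = forward(pixels, layers)
print([len(r) for r in reps])  # [576, 50, 50, 10]
```

Making the network deeper amounts to adding entries to `sizes`. Deep learning is the process of finding good values for all of those weights.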

It’s an interesting but challenging task to understand the internal representations of a deep network, and I’ll show you a couple of examples below if you’d like to explore, but first I want to discuss internal representations in networks whose inputs are more abstract. When the input is an image, the internal representations can always be interpreted in visual terms. But AnswerOn typically has a very different kind of input.

Consider a problem that AnswerOn faces: Predicting whether an agent at a call center will terminate employment.

The input representation includes features like “how long has the agent been with the company” and “does the agent work in call center 473”. In this case, the internal representations will be useful ways of grouping and splitting the input features. For example, suppose that employees at call centers 473 and 128 tend to behave very similarly, perhaps because the supervisor at these facilities is very strict. An intermediate feature might then detect whether the employee is at one of these two facilities. Or suppose that certain employees respond well to strict supervision, say employees who have not been with the call center for very long. Then an intermediate feature might detect the conjunction of the employee having a short time of employment and also working at one of the call centers with a strict supervisor. The reality of the representations discovered by a neural net is that they are always more subtle and graded than these simple illustrations suggest. AnswerOn puts much effort into understanding the operation of a neural net because doing so allows us to suggest new input features that should be incorporated into the models and to identify features that are unnecessary and can be dropped (thereby saving effort and expense in data collection).
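The grouping and conjunction features described above can be sketched with two hidden units. This is a hand-built toy, not AnswerOn’s model: the weights are set by hand where a real net would learn them, and the names `strict_site` and `new_and_strict` are hypothetical.

```python
import math

def sigmoid(x):
    """Squash any number into the range 0 to 1."""
    return 1.0 / (1.0 + math.exp(-x))

def strict_site(at_473, at_128):
    """Hidden unit acting like OR: on if the agent is at either call center."""
    return sigmoid(10 * at_473 + 10 * at_128 - 5)

def new_and_strict(short_tenure, strict):
    """Hidden unit acting like AND: on only if both of its inputs are on."""
    return sigmoid(10 * short_tenure + 10 * strict - 15)

# A new agent at call center 473: both intermediate features switch on
site = strict_site(at_473=1, at_128=0)
print(round(site, 2), round(new_and_strict(1, site), 2))

# A long-tenured agent at the same center: only the grouping feature is on
print(round(site, 2), round(new_and_strict(0, site), 2))
```

The outputs are close to 0 or 1 without being exactly either, which hints at the graded character of real hidden units. Learned weights are rarely this clean.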

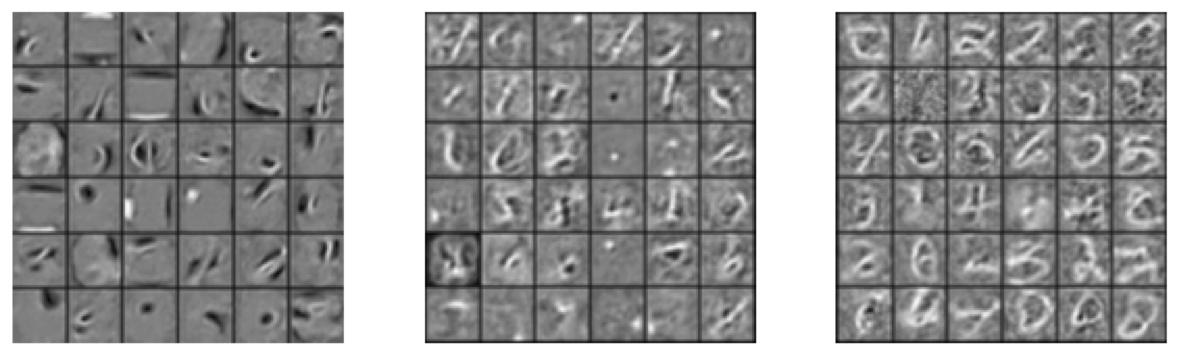

Let me close by showing you a few more examples of representations discovered by neural nets used for image processing. The first example is a digit-recognition network with three hidden layers by Erhan et al. (2009). Each block represents one layer, and each layer has 36 features, laid out in a 6×6 grid. The network detects strokes and lines in the first layer, but then assembles them into complete or partial digits in the higher layers.

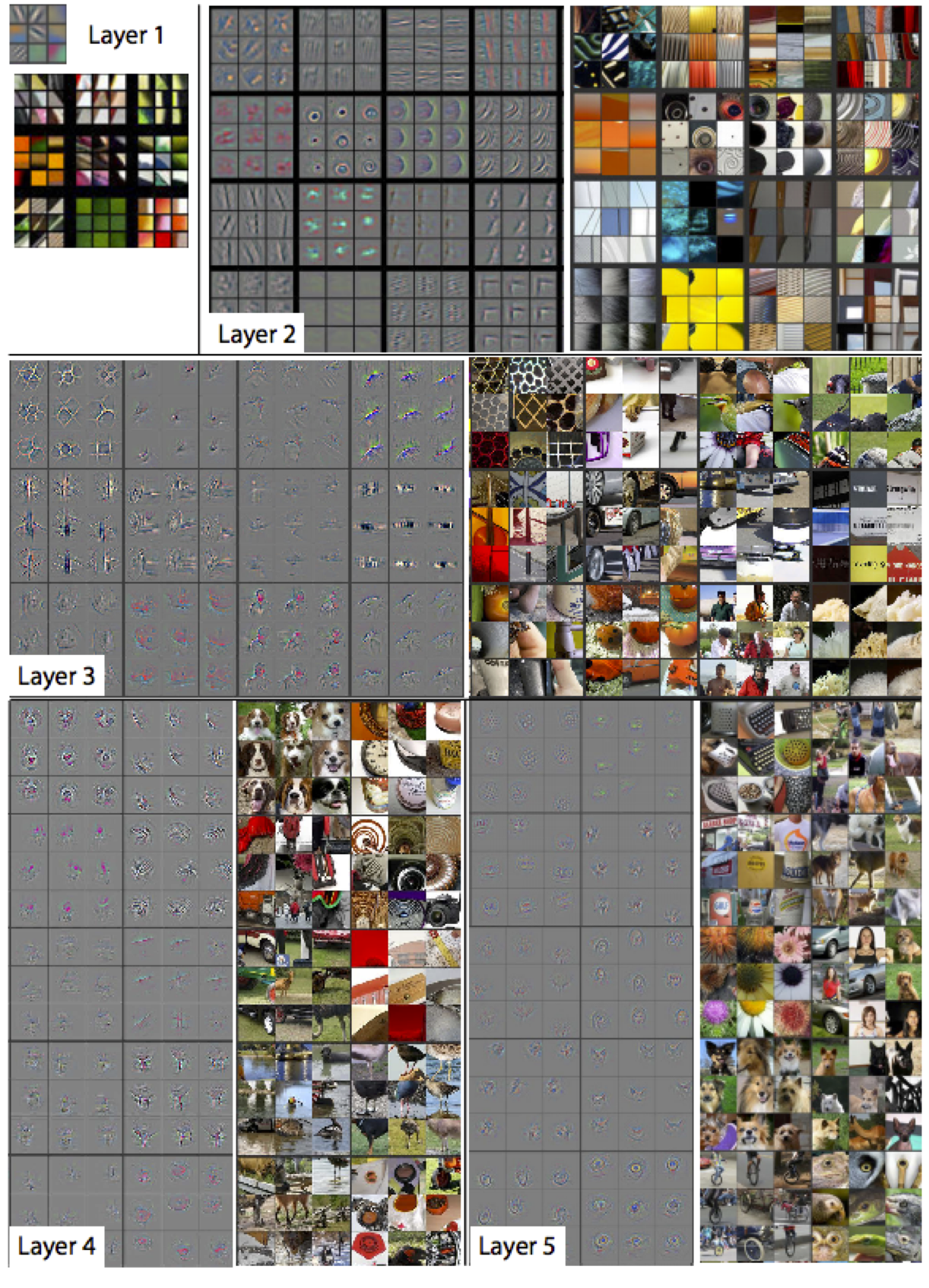

The internal representations are even more interesting when a neural network is trained on natural, color images. Below is a result from Zeiler and Fergus (2014) showing 5 layers of representation transformation. Detectors in layer 1 are looking at small patches of an image, not the whole image. There are 9 detectors for layer 1, shown with the grey background. The net is detecting edges and lines. Below the detectors are sample patches of the image that trigger the corresponding detectors to respond. Looking at these image patches in each successive layer suggests that the detectors are responding to more and more complex features of images. By the 5th layer, the detectors seem to be discriminating objects. For example, one detector responds strongly to dogs, another to wheels, another to owl eyes.
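To give a feel for what it means for a detector to look at small patches, here is a minimal sketch that slides one small detector across every position of a toy grayscale image, as a convolutional network does. The 2×2 detector, the 4×5 image, and the function name `slide` are hypothetical and far simpler than the color detectors in the figure.

```python
def slide(detector, image):
    """Apply a small square detector at every position where it fits in the image."""
    k = len(detector)
    rows, cols = len(image), len(image[0])
    return [
        [
            sum(
                detector[i][j] * image[r + i][c + j]
                for i in range(k)
                for j in range(k)
            )
            for c in range(cols - k + 1)
        ]
        for r in range(rows - k + 1)
    ]

# Hypothetical 2x2 detector for a dark-to-light vertical edge
vertical_edge = [[-1, 1],
                 [-1, 1]]

# Toy 4x5 image: dark (0) in the two left columns, light (1) on the right
image = [[0, 0, 1, 1, 1] for _ in range(4)]

response = slide(vertical_edge, image)
for row in response:
    print(row)  # each row is [0, 2, 0, 0]
```

The response is nonzero only at the patches that straddle the dark-to-light boundary. Higher layers apply the same idea to the responses of the layer below, which is how more complex features can be assembled.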

References

Erhan, D., Bengio, Y., Courville, A., & Vincent, P. (2009). Visualizing higher-layer features of a deep network. Technical Report 1341. Montreal: Department of Computer Science, University of Montreal.

Rumelhart, D. E., Hinton, G. E., & Williams, R. J. (1986). Learning representations by back-propagating errors. Nature, 323, 533-536.

von Neumann, J. (1958). The computer and the brain. New Haven: Yale University Press.

Zeiler, M. D., & Fergus, R. (2014). Visualizing and understanding convolutional networks. Lecture Notes in Computer Science Volume 8669 (pp. 818-833). Springer.